Choosing Your Stack

Core principles

The most important mindset to adopt, before talking about specific technologies, is to think about who your users are and how they will access the service you're building. This should be the primary driver of your decision making, because the experiences you're building are for your users. Questions you can ask yourself include:

- How will my users be accessing the service? What devices will they be using? Will it predominantly be mobile? Do I have data to show what the distribution looks like?

- Where are my users located geographically?

- What kind of networks are my users on when accessing the service? If it's mostly cellular, can I estimate what their network conditions are like based on their location?

Thinking about the kind of service you're building can also help you answer the above questions. Some questions you can ask yourself are:

- What is the value I'm providing with this service?

- How long will my users be interacting with my service per session? Will they have deep sessions or shallow ones?

- Or, will the type of session my users have depend on their particular journey within my service?

It might be challenging to get concrete answers to these questions if you're building a brand new experience, but you can look at what your nearest competitor is doing. Broadly speaking, most new experiences built today are a recycling of experiences built in the past. If, in the rare case, you're building something truly novel, then thinking about your users (and the experience you'd like them to have) in these terms can help you orient yourself towards more appropriate solutions.

It might seem daunting to go through this exercise if you expect one technology or approach to fall out of it. It's important to realise that there are no one-size-fits-all solutions and that every approach comes with trade-offs. This exercise will bring you closer to understanding which trade-offs are worth making without hurting the user experience. When you shoehorn one solution into solving problems it wasn't designed for, it usually ends up hurting both your users and your service. You might be able to deliver a few things that work in the short run, but over time the added complexity will hurt unless you also invest in managing that complexity on top of managing your service. In resource-constrained environments, this additional investment becomes harder and harder to justify, especially if you can't clearly demonstrate its value beyond it being a one-size-fits-all solution.

Session depth

Session depth is the number of interactions a user has with an application in a single session. Interactions can include:

- Clicking with a mouse

- Tapping on a touchscreen

- Pressing a key on a physical or virtual keyboard

- Scrolling on a page

To understand your session depths, the interactions you should consider closely are the ones driven by your application logic. For example, when your page is longer than what fits in the viewport, the browser automatically handles scrolling. You don't need to write any application logic to handle it, and therefore you don't need to count it towards your overall session depth. The same goes for form input fields. Clicking into a field is an action you need to count because you coded your application to have a form field, but you don't need to count every single key press because the browser handles accepting input and rendering it on the screen. However, if you write code to handle any of those interactions (e.g., scroll-jacking or running logic on input), then you should count them towards your overall session depth. Naturally then, the pro move is to offload handling interactions to the platform as much as possible, so you're only on the hook for the few that are essential to the experience you're delivering.

Different tools give you slightly different numbers based on what they count as interactions, so you can use those numbers in conjunction with the Core Web Vital Interaction to Next Paint (INP) to monitor how interactions in your application feel to your users. For example, Datadog RUM only counts clicks as actions. If the number of actions it has counted per session is relatively low, but your INP is quite high, then you can draw one of two conclusions from it:

- You have too much JavaScript driving the interactions Datadog RUM recorded

- You have gaps in your measurement for what you should be counting as an interaction
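The check described above can be sketched as a small function. This is a minimal sketch, not a feature of Datadog RUM or any other tool: the `rateInp` thresholds are the published Core Web Vitals bands for INP (200 ms and 500 ms), while `LOW_ACTION_COUNT` and `possibleCauses` are hypothetical names and values chosen purely for illustration.

```typescript
type InpRating = "good" | "needs improvement" | "poor";

// Core Web Vitals bands for INP, in milliseconds.
function rateInp(inpMs: number): InpRating {
  if (inpMs <= 200) return "good";
  if (inpMs <= 500) return "needs improvement";
  return "poor";
}

// Hypothetical cut-off for a "relatively low" number of recorded actions
// per session. Pick a value that makes sense for your own service.
const LOW_ACTION_COUNT = 5;

// Returns the two candidate explanations from the text when few actions
// were recorded but INP is not rated "good"; otherwise returns nothing.
function possibleCauses(actionsPerSession: number, inpMs: number): string[] {
  if (actionsPerSession >= LOW_ACTION_COUNT || rateInp(inpMs) === "good") {
    return [];
  }
  return [
    "too much JavaScript driving the recorded interactions",
    "gaps in what is being measured as an interaction",
  ];
}

console.log(possibleCauses(3, 650));
```

Either explanation still needs a human to confirm it; the function only flags sessions worth a closer look.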

In summary, what counts as an interaction when measuring session depth is anything we write code to handle. For example, if we were to measure the interactions in a session that looked like this:

- User types in the url, or clicks on a link to get to our site (1 interaction)

- User reads the content on our site, and scrolls to the bottom (0 interactions)

- User clicks on a link on our site and goes elsewhere (1 interaction)

We count the first one because once they click on it, we’re responsible for loading all of the page and all its resources (HTML, CSS, JS, images and fonts).
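The counting rule above can be sketched in a few lines. The `RecordedInteraction` shape and the `handledByApplication` flag are hypothetical names used for illustration; real analytics tools record interactions in their own formats.

```typescript
type InteractionKind = "navigation" | "click" | "tap" | "keypress" | "scroll";

interface RecordedInteraction {
  kind: InteractionKind;
  // True when we are responsible for the interaction (our code handles it,
  // or it loads our page); false when the browser handles it on its own,
  // such as native scrolling.
  handledByApplication: boolean;
}

// Session depth counts only the interactions we are responsible for.
function sessionDepth(interactions: RecordedInteraction[]): number {
  return interactions.filter((i) => i.handledByApplication).length;
}

// The example session from the text.
const exampleSession: RecordedInteraction[] = [
  { kind: "navigation", handledByApplication: true }, // arrives on our site
  { kind: "scroll", handledByApplication: false }, // reads and scrolls natively
  { kind: "click", handledByApplication: true }, // clicks a link on our site
];

console.log(sessionDepth(exampleSession)); // 2
```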

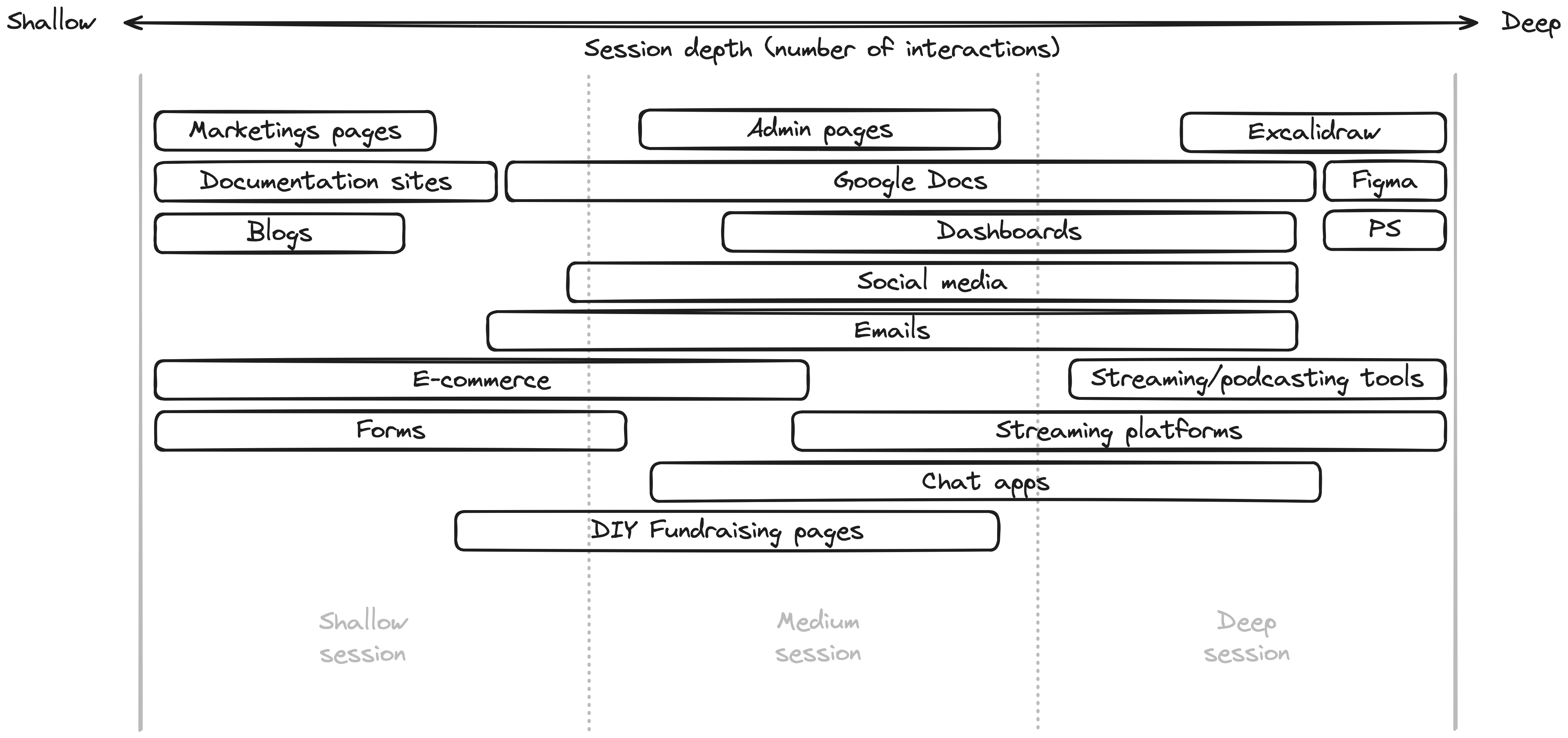

Examples of applications and their session depths

Examples of application types with shallow sessions:

- Blogs and marketing pages

- Simple forms

Examples of application types with deep sessions:

- Word processors

- Editors

- Games

Examples of applications that have a multimodal distribution:

- Blogging platform: An application like WordPress is both a rich editor application and a simple static site, so the two humps in your distribution would correspond to your blog editors and your site visitors.

- Email: Some users might open the mail client to quickly view a single email and some might be setting up their daily driver to process hundreds of them.

Thinking in terms of statistical distributions means you can make better decisions for your users. For example, suppose your blog site has a lot of interaction opportunities (e.g., calls to action, interactive demos, widgets), but your analytics data tells you that 75% of users have fewer than 4 interactions. Does it make sense to use a solution that would be optimal for 25% of your users but hurt the remaining 75%? If that's justifiable from a product perspective, then go for it. If not, then you'll need to flip who you're optimising for so you're not failing by default.
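As a rough illustration of reading this from your own data, the sketch below computes the share of sessions that fall under a given depth. The function name `shareOfSessionsBelow` and the sample numbers are made up for the example; substitute the per-session depths from your own analytics.

```typescript
// Fraction of sessions whose depth is strictly below `limit`.
function shareOfSessionsBelow(depths: number[], limit: number): number {
  if (depths.length === 0) return 0;
  const below = depths.filter((d) => d < limit).length;
  return below / depths.length;
}

// Hypothetical per-session depths: mostly shallow, with a few deep outliers.
const sampleDepths = [1, 2, 2, 3, 1, 3, 12, 40];

console.log(shareOfSessionsBelow(sampleDepths, 4)); // 0.75
```

Note that the mean of this sample is 8, which describes almost none of the sessions in it. That is why looking at the distribution, rather than a single average, matters here.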

Here is a range of applications roughly mapped by their session depths:

Some of them have really wide ranges, either because they can have multimodal distributions or because there are examples in the wild that would place them at either end of the spectrum. For example, a form to sign up for a newsletter with just your email address is super shallow, whereas a form to apply for insurance could be quite deep.

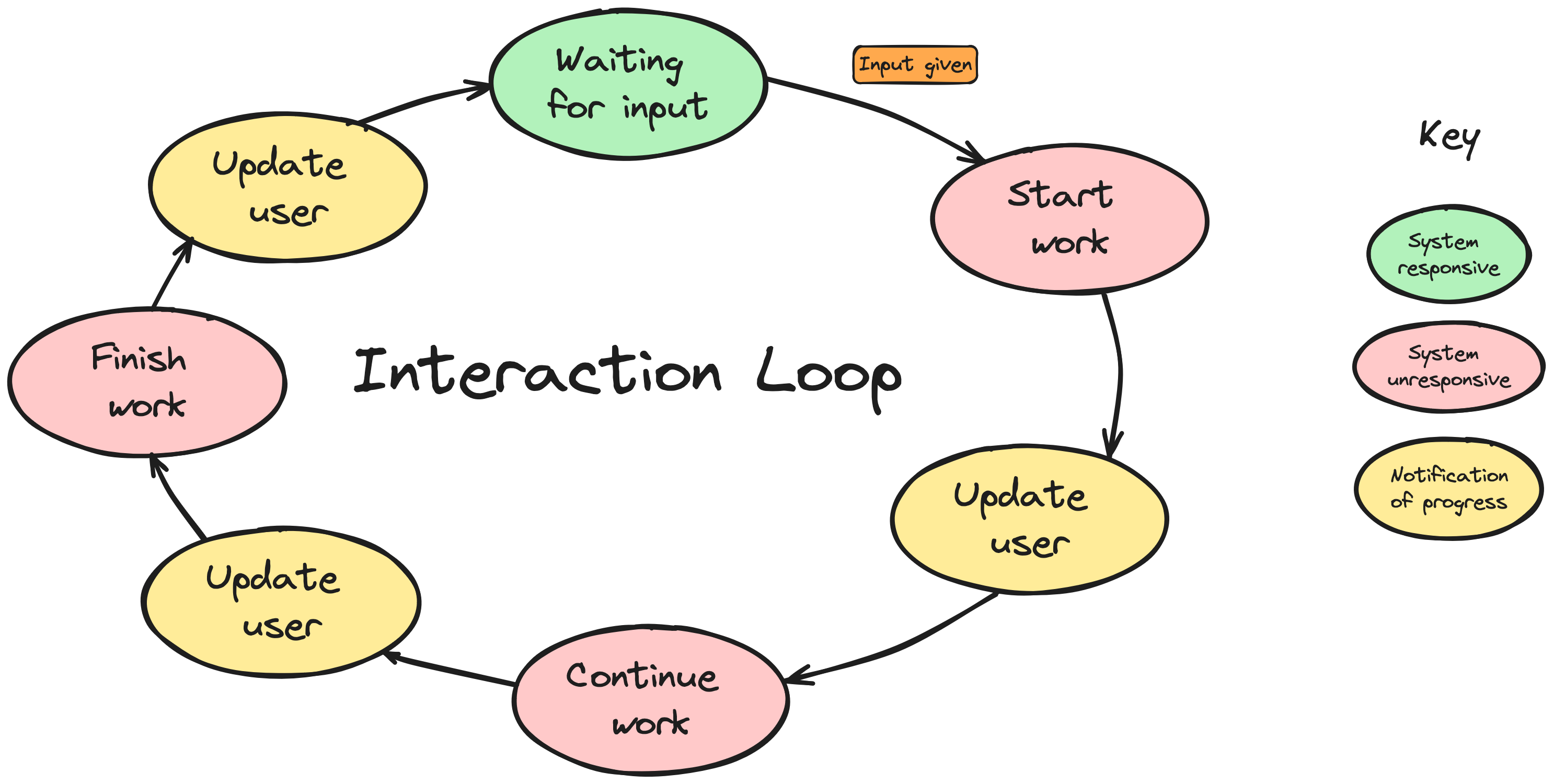

Thinking about a single interaction

This interaction loop describes both of the following scenarios:

- Clicking a link and loading a new page

- Clicking a button that fires a fetch request and then constructing new UI based on the response on the same page

In the first scenario, the interaction requires a full server round trip for a new page and it doesn't feel seamless, but it is entirely handled by the platform. In the second scenario, the interaction needs to be handled by the application by maintaining a local data model and some machinery to convert the fetched response into DOM nodes, but it might feel more seamless since an entirely new page doesn't need to be loaded. However, this seamlessness comes at a cost of increased client-side complexity, and whether that's a price worth paying depends on how your users behave in your application.
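To make the "machinery" in the second scenario concrete, here is a minimal sketch of a local data model plus a render step. Everything in it (`CommentItem`, `CommentsModel`, `mergeFetched`, `renderComments`) is a hypothetical example, and it renders to an HTML string rather than real DOM nodes so that it can run outside a browser.

```typescript
interface CommentItem {
  id: number;
  author: string;
  body: string;
}

interface CommentsModel {
  items: CommentItem[];
}

// Merge fetched items into the local model, skipping ids we already hold.
function mergeFetched(model: CommentsModel, fetched: CommentItem[]): CommentsModel {
  const fresh = fetched.filter((f) => !model.items.some((c) => c.id === f.id));
  return { items: model.items.concat(fresh) };
}

// Escape user-supplied text before placing it into markup.
function escapeHtml(text: string): string {
  return text
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

// Convert the model into markup. In a browser this step would create or
// patch DOM nodes instead of building a string.
function renderComments(model: CommentsModel): string {
  const rows = model.items.map(
    (c) => `<li>${escapeHtml(c.author)}: ${escapeHtml(c.body)}</li>`
  );
  return `<ul>${rows.join("")}</ul>`;
}

const before: CommentsModel = { items: [{ id: 1, author: "Ada", body: "Hi" }] };
const after = mergeFetched(before, [{ id: 2, author: "Bo", body: "1 < 2" }]);
console.log(renderComments(after));
```

Even this small example needs deduplication, escaping and rendering, none of which you write when the interaction is a plain link to a new page.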

Choice Framework

Now that you understand your users and know the kind of application you'll be building, you can ask more specific questions that will take you towards a suitable type of solution. The underlying premise here is that there's no one-size-fits-all solution, so you need to weigh up what's right for your users and service. The two extreme ends of the spectrum your user experience can fall between are:

- Every interaction requires a full server round trip

- Every interaction is handled locally with a local data model that synchronises with the server

The reason we're framing things this way is that it recognises that the frontend cannot exist in isolation from the backend, and it gets us to start thinking about what costs we can afford. Based on what we've seen with session depth so far, we can begin to correlate the two ideas like this:

- If you have shallow sessions, then the simplicity of a full server round trip far outweighs the user experience gains of handling the interaction locally.

- If you have deep sessions, then additional complexity might be warranted to mitigate the user experience impact of full server round trips.

What are the costs involved in building a website or web application? There are costs that the user absorbs: HTML, CSS, JavaScript, third-party scripts, fonts, images and other assets. There are also costs that you, as the provider of the service, deal with directly. These come from how you store and serve your assets, and also how you produce them. Ultimately, you're responsible for all the costs even if you don't directly pay for them. Some questions you can ask yourself to keep it grounded are:

- What costs am I paying?

- Who am I paying them for?

- What am I getting out of it?

Not all resources are weighted equally. JavaScript is byte-for-byte at least 3 times more expensive than HTML and CSS.

If you just look at the total cost the user absorbs, then the cost of an application with deep sessions that handles interactions locally will always be higher than that of a simple blog. This makes sense, but it doesn't tell you whether those costs are justifiable when balancing user experience against the cost of resources. To make the comparison fairer, let's amortise the cost like this:

Amortised cost = Resources required / Session depth

This will average the cost of the resources over the interactions in a session, which gives you a much better picture of what you can afford. This exercise of amortising cost helps you justify the resources required to deliver your experience. For example, if your interactive editor application takes a long time to load, you can justify it because, once it's loaded, the experience benefits from the reduced network latency between interactions and everything feels snappy. Because not all applications fall neatly at either end of the complexity spectrum, some fundamental techniques that can help are:

- Progressive enhancement: This lets you slowly add more complexity where you need it without hurting the baseline experience.

- Multi-architecture applications: One example of this approach is using different stacks to build your website and the CMS you use to manage it. Another is using different architectures within a single application, such as a local-first approach for your editor but a simple HTML form to update a user's profile page.
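The amortised cost formula above can be sketched as follows. This is an illustration under stated assumptions rather than a measurement method: `JS_WEIGHT` takes the "at least 3 times" figure from the text as a lower bound, fonts, images and other assets are left out for brevity, and all byte counts and session depths are invented.

```typescript
interface ResourceKilobytes {
  html: number;
  css: number;
  js: number;
}

// Assumption: JavaScript is weighted 3x, the lower bound given in the text.
const JS_WEIGHT = 3;

// Total resources required, with JavaScript weighted more heavily.
function weightedCost(r: ResourceKilobytes): number {
  return r.html + r.css + JS_WEIGHT * r.js;
}

// Amortised cost = Resources required / Session depth
function amortisedCost(r: ResourceKilobytes, depth: number): number {
  if (depth <= 0) {
    throw new RangeError("session depth must be positive");
  }
  return weightedCost(r) / depth;
}

// Hypothetical applications and session depths.
const blogPage: ResourceKilobytes = { html: 30, css: 20, js: 50 };
const editorApp: ResourceKilobytes = { html: 20, css: 80, js: 900 };

console.log(amortisedCost(blogPage, 2)); // 100
console.log(amortisedCost(editorApp, 100)); // 28
```

With these made-up numbers, the editor ships far more weighted kilobytes in total (2800 against 200) but costs less per interaction, which is the justification the text describes. The same editor bundle served to a session of depth 2 would cost 1400 per interaction.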

Bake Offs

A bake off, or a collection of proofs of concept, can be an effective way to filter through the noise of currently trending tools and approaches. It can be a useful exercise for determining the specific approach, tool or technology that's right for you and your users. The aim is to shift the analysis of stack complexity, in the context of your users, left, so you don't go down a path you can't easily come back from. Establishing some pro-user and pro-marginal-user metrics to judge your service against will cost you a lot less in the long run than picking an arbitrary approach that isn't fit for purpose. Picking arbitrarily means taking on tech debt and owning unnecessary complexity from the start.

Further reading

- "Towards a Unified Theory of Web Performance" by Alex Russell (2022)

- "A Management Maturity Model for Performance" by Alex Russell (2022)

- "htmx: a new old way to build the web" by JS Party (2024)

- GDS Service Manual: Choosing Technology

- GDS Service Manual: Progressive Enhancement

- GDS: Why we focus on frontend performance

- GDS: Why we use progressive enhancement to build GOV.UK

- GDS Service Manual: How to test frontend performance

- GDS Service Manual: Designing for different browsers and devices

- GDS: The Technology Code of Practice